Read about Unlocking Data Excellence and Compliance with AWS Compute & High Performance Computing for Digital Transformation....

Learn about the benefits and difficulties of combining SAP Support Services from Peritos with CPI integration for our client....

This case study explains how Peritos helped AmityWA address its data challenges by leveraging SAP Support Services....

Find out about the features & challenges of implementing Image Processing Border Detection, a custom web app developed for Cumuluspro....

This case study explores the features & challenges of migrating from Azure Dataverse to Azure Blob Storage under Microsoft Dynamics Support....

This case study explains how an AWS IoT integration provides ready access to inventory levels and improves the management of vital parameters....

Learn how to migrate your website development & hosting from your existing cloud solution to the Azure cloud platform. Read more about it now....

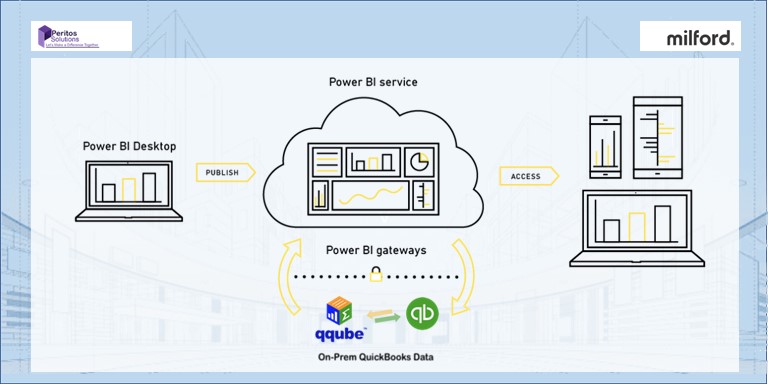

This case study examines how we integrated Power BI with QuickBooks to give our client a seamless user experience....