Read about how Peritos enhanced an AWS Control Tower setup with streamlined governance, compliance, and cost control for a more robust cloud infrastructure...

This project explores managing an AWS environment for efficient operations: Peritos optimizes resource allocation and cost control for digital products...

Read about unlocking data excellence and compliance with AWS compute and high-performance computing for digital transformation...

Read the project details on setting up the client's AWS environment. We used AWS Control Tower, adhering to AWS NIST compliance, for multi-account, multi-environment use...

This case study explores the features and challenges of an SAP implementation for an enterprise such as Afghanistan Holding Group...

This project explores custom app development on AWS, using ESRI ArcGIS to read data and generate hazard reports for a property that the user can search for with Google Maps...

This project explores the features, challenges, and implementation of Cumulus Pro, a digital receipt and invoice management application...

This project shows how we created a custom LinkedIn app that helps a digital influence agency manage its LinkedIn leads...

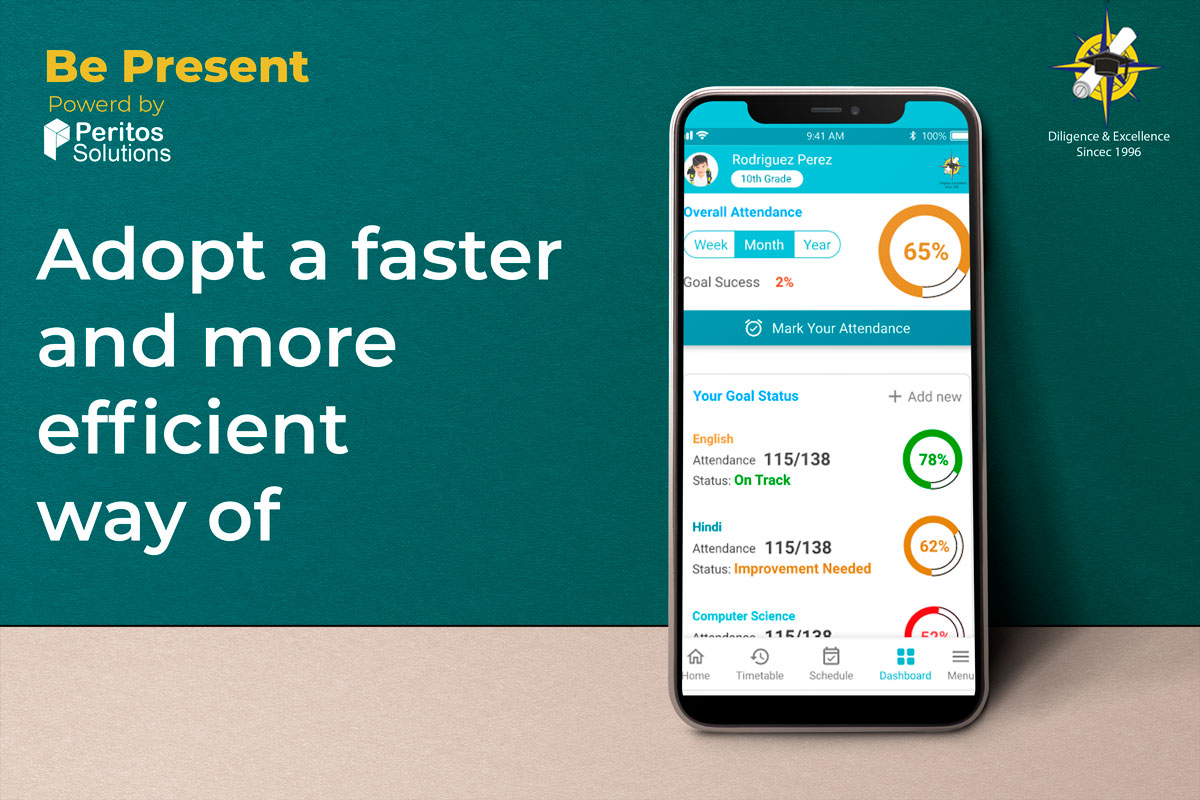

This case study explores how online attendance management software was built from scratch for a professional institute...